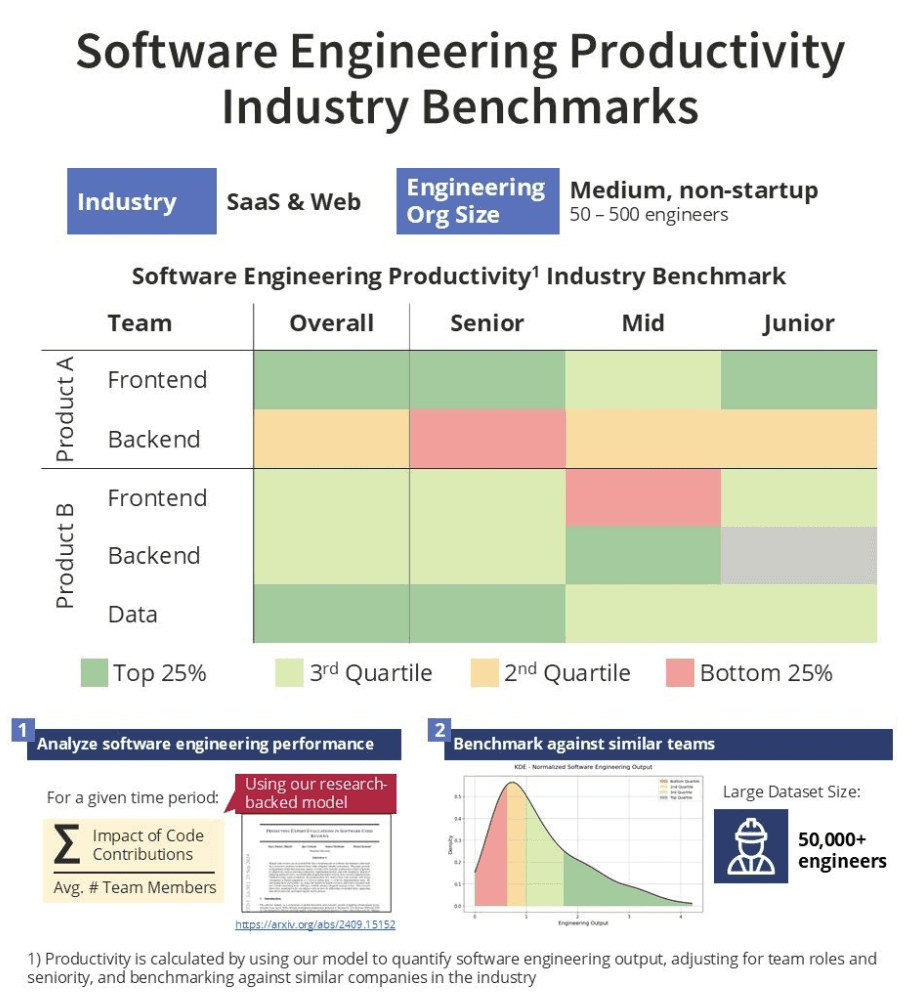

We've been conducting productivity benchmarks for companies more frequently and would love to understand your perspectives on their use cases, benefits, and potential pitfalls.

The chart shown here is a sanitized example from a medium-sized company in the SaaS and web space.

I view these benchmarks as diagnostic tools. They highlight issues that need fixing and identify top-performing teams whose best practices can be shared across the organization.

A team's placement in the bottom quartile doesn't mean they lack potential; it just means they're currently underperforming and that you can help them.

Their underperformance could be due to factors beyond the team's control, such as:

- Unclear product requirements

- Excessive meetings

- Being pulled into other projects or initiatives

- Slow or unreliable CI/CD pipelines

- Unstable development environments

- Dependency issues and knowledge silos

- Overly complex architecture requiring extensive investigation before changes

It could also be that the team:

- Has low potential and skill but is performing at its maximum capacity

- Has low potential and skill and isn't reaching even that potential

We calculate productivity by using our model to quantify software engineering output, adjusting for team roles and seniority, and benchmarking against similar companies in the industry.
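To make those three steps concrete, here is a minimal sketch of how an adjusted score and a quartile placement could be computed. It is not our actual model: the seniority weights, the `Engineer` type, and the peer scores are all hypothetical, and the per-engineer output units are assumed to have already been produced by the model.

```python
import math
from bisect import bisect_right
from dataclasses import dataclass

# Hypothetical expected-output weights by seniority (illustrative only).
SENIORITY_WEIGHT = {"junior": 0.6, "mid": 1.0, "senior": 1.4}


@dataclass
class Engineer:
    seniority: str
    output_units: float  # assumed to come from the model for the period


def adjusted_productivity(team):
    """Total output divided by the seniority-weighted headcount."""
    expected = sum(SENIORITY_WEIGHT[e.seniority] for e in team)
    return sum(e.output_units for e in team) / expected


def percentile(score, peer_scores):
    """Percentage of peer scores that are at or below `score`."""
    ranked = sorted(peer_scores)
    return 100.0 * bisect_right(ranked, score) / len(ranked)


def quartile(pct):
    """Map a percentile to a quartile: 1 = bottom, 4 = top."""
    return max(1, math.ceil(pct / 25))


# Hypothetical team and hypothetical scores from similar companies.
team = [Engineer("senior", 28.0), Engineer("junior", 12.0)]
peer_scores = [10.0, 15.0, 20.0, 25.0]

score = adjusted_productivity(team)
team_percentile = percentile(score, peer_scores)
team_quartile = quartile(team_percentile)
```

Dividing by a weighted headcount rather than a raw one is the design choice that matters here: a team of juniors is compared against what juniors are expected to produce, not against a team of seniors.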

Think of our model as performing the work of a panel of 10 independent software engineering experts who manually evaluate each commit across different dimensions to arrive at a productivity quantification (output units).

A panel of 10 experts would be slow, unscalable, and prohibitively expensive. Our model's ratings correlate strongly with human expert assessments, but it delivers them in a fraction of the time and at a fraction of the cost.

You can’t build high-performing software teams on intuition and politics; it simply doesn’t scale. Like it or not, data-driven decision-making in software engineering is essential and here to stay.

A chart like this might seem reductive, but it offers critical clarity. By anchoring discussions in objective data, it shifts the focus away from politics and subjective guesswork. This is crucial because, let’s be honest, politics is one of the worst parts of software engineering… and “intuition” is often clouded by personal biases or agendas (we’re all human, it’s normal).

And here’s the hard truth: from a game-theory perspective, it’s rarely in a software engineering manager’s best interest to admit that their team is underperforming, let alone that it’s their fault. Without extraordinary trust, the risks outweigh the benefits, as such honesty could lead to blame or career repercussions rather than constructive solutions.

Objective data changes the conversation. It’s not about blame; it’s about uncovering the truth and solving problems.