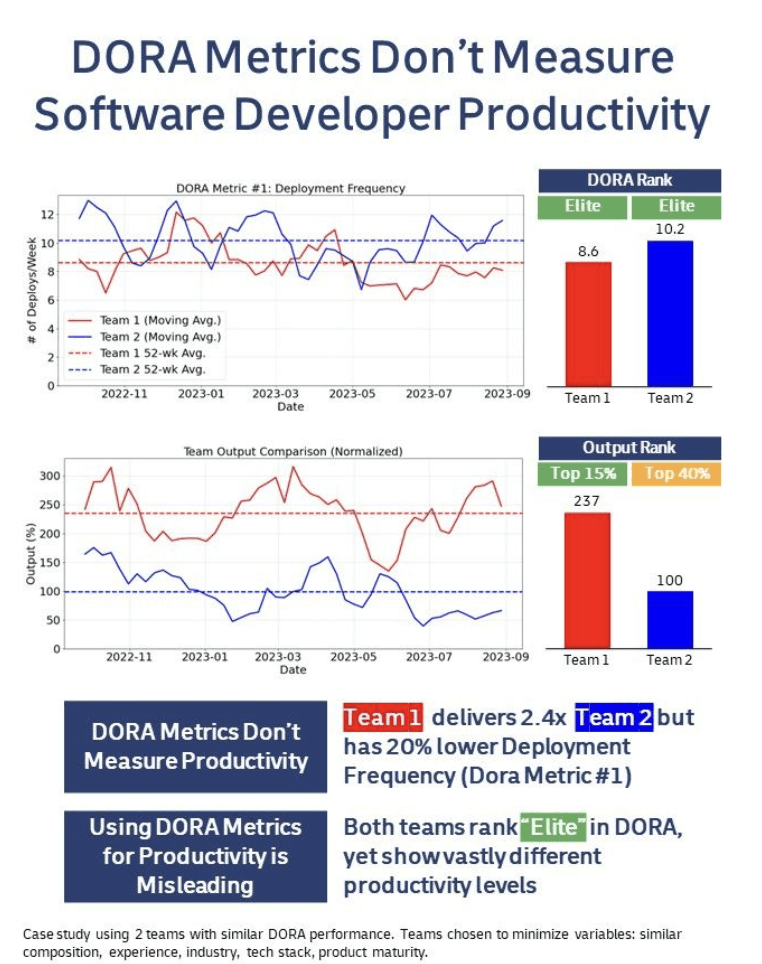

We illustrate this with a case study of two similar teams: both deploy multiple times daily and rank "Elite" by DORA standards, yet one produces 2.4x more output (i.e., it is 2.4x more productive).

When evaluated using our productivity algorithm, Team 2 lands in the “top 40%” for productivity, which is closer to “Average” than to “Elite”.

Even if we remove our algorithm from the equation, using deployment frequency as a measure of productivity is flawed:

1) Deployment sizes aren’t constant within or across teams. Is a team that deploys 5 times a week *always* better than one that deploys 4 times a week? How much better?

2) Deployment frequency is gameable. Once you start measuring your teams using this, they’ll be incentivized to reduce the size of each deployment. Trivial updates might become the norm. You can easily improve DORA metrics without improving your productivity.
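The two problems above can be made concrete with a small sketch. Everything in it is hypothetical: the `Deployment` type, the `output_units` field (a stand-in for whatever measure of shipped functionality you trust), and the numbers, which are chosen only to mirror the 2.4x gap in the case study. It is an illustration of the argument, not our algorithm.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    team: str
    output_units: float  # hypothetical measure of shipped functionality


def summarize(deployments):
    """Return {team: (deploy_count, total_output)}."""
    summary = {}
    for d in deployments:
        count, total = summary.get(d.team, (0, 0.0))
        summary[d.team] = (count + 1, total + d.output_units)
    return summary


def split_each(deployments, parts):
    """Game the metric: ship the same work as `parts` smaller deployments."""
    return [
        Deployment(d.team, d.output_units / parts)
        for d in deployments
        for _ in range(parts)
    ]


# Hypothetical week: both teams deploy 10 times, so frequency is identical.
team_1 = [Deployment("Team 1", 12.0) for _ in range(10)]
team_2 = [Deployment("Team 2", 5.0) for _ in range(10)]

summary = summarize(team_1 + team_2)
ratio = summary["Team 1"][1] / summary["Team 2"][1]  # output gap, not visible in frequency

# Team 2 triples its deployment frequency without shipping anything extra.
gamed = summarize(split_each(team_2, 3))

print(summary)
print(f"output ratio: {ratio:.1f}x")
print(gamed)
```

Both teams show the same deployment count even though their total output differs, and splitting each deployment into three raises Team 2's frequency while leaving its total output unchanged.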

To draw an analogy from my experience as a former competitive Olympic weightlifter, using DORA to measure productivity is like tracking gym visits without considering the weights lifted.

Are DORA metrics still useful?

- Sure, there’s value in them. But they’re not a productivity metric and shouldn’t be used as such.

Why did people start using DORA as a productivity metric?

- Historically, as teams transitioned from waterfall with quarterly deployments to agile with multiple weekly deployments, deployment frequency seemed to correlate with productivity.

- A team deploying several times a week often outperformed one deploying once a quarter.

- In the absence of a robust productivity metric in software engineering, DORA metrics gradually became a stand-in for measuring productivity.

How do we measure developer productivity in this case study?

Our algorithm measures developer productivity by analyzing the functionality (i.e., what the code does) of code changes in Git commits. It considers more than 30 codebase dimensions and has been calibrated using data from millions of files in more than 10 programming languages. This lets us quantify the impact of each commit and, by combining that impact with Git metadata, provide a comprehensive measure of both individual and team productivity.
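As a rough sketch of only the last step, combining per-commit impact with Git metadata, the aggregation might look like the following. The scoring model itself is not shown here; the `ScoredCommit` type, its `score` field, and the sample values are placeholders assumed purely for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class ScoredCommit(NamedTuple):
    sha: str      # commit hash from Git metadata
    author: str   # commit author from Git metadata
    team: str     # hypothetical author-to-team mapping
    score: float  # placeholder for a per-commit impact score


def aggregate(commits):
    """Sum per-commit scores into per-author and per-team totals."""
    by_author = defaultdict(float)
    by_team = defaultdict(float)
    for c in commits:
        by_author[c.author] += c.score
        by_team[c.team] += c.score
    return dict(by_author), dict(by_team)


# Made-up sample data, not real measurements.
commits = [
    ScoredCommit("a1", "alice", "Team 1", 3.0),
    ScoredCommit("b2", "bob", "Team 1", 1.5),
    ScoredCommit("c3", "carol", "Team 2", 2.0),
    ScoredCommit("d4", "alice", "Team 1", 0.5),
]

by_author, by_team = aggregate(commits)
print(by_author)
print(by_team)
```

The point of the sketch is that totals are driven by what each commit contributes, not by how many commits or deployments there are.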

About Our Mission

- We are conducting research at Stanford focused on quantifying software engineering productivity.

- Our objective is to enable engineering teams to base decisions on factual data, rather than on gut feelings and internal politics.

- Our research participants use our algorithm to make decisions about their teams’ performance, outsourcing, working arrangements (home vs. office), and more.